Data Analysis

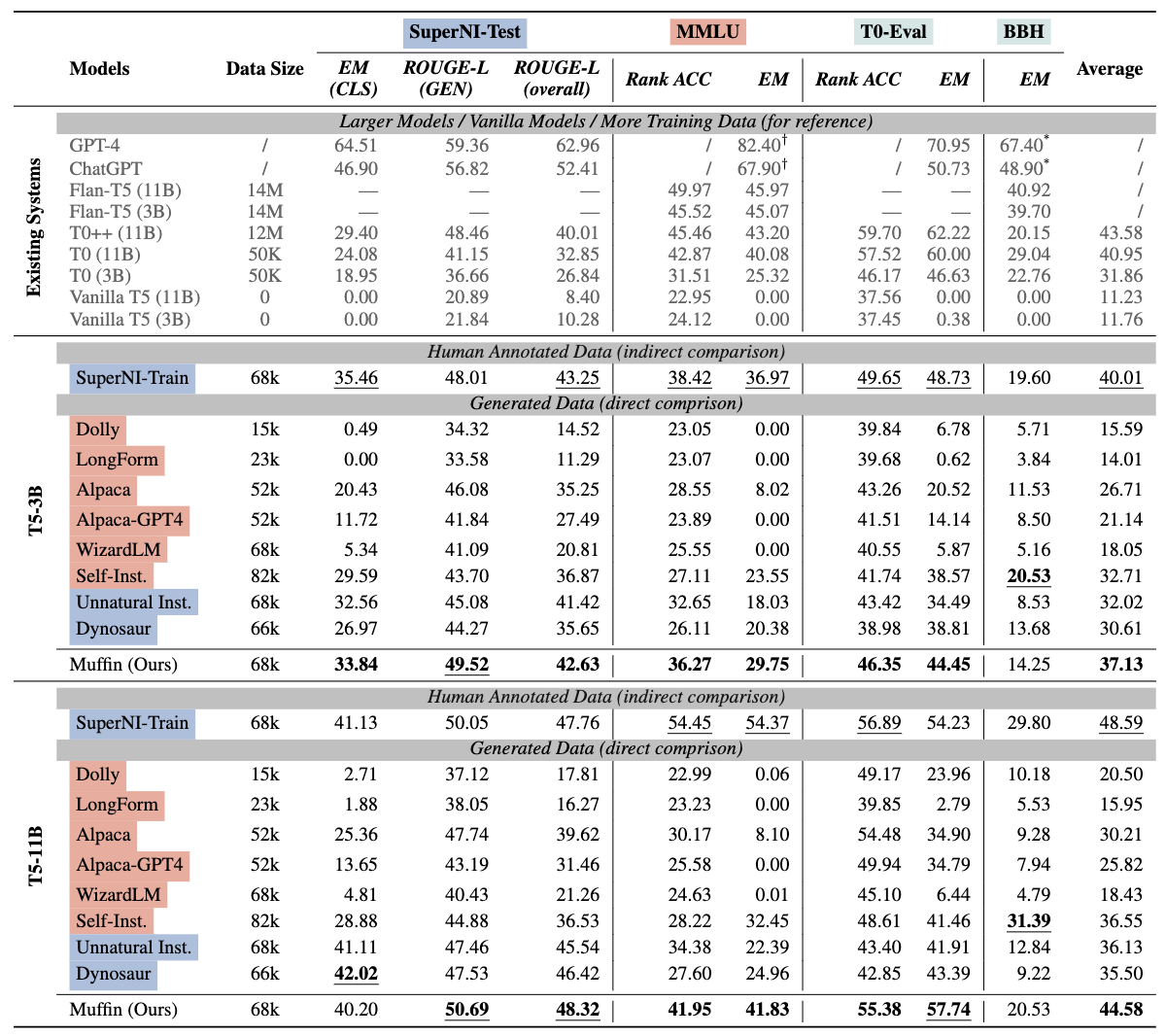

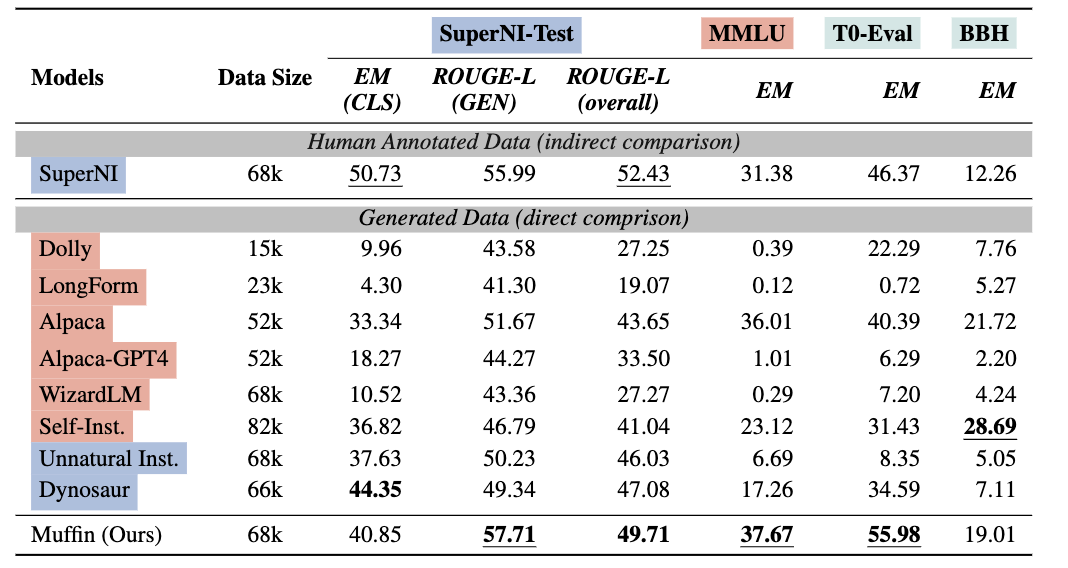

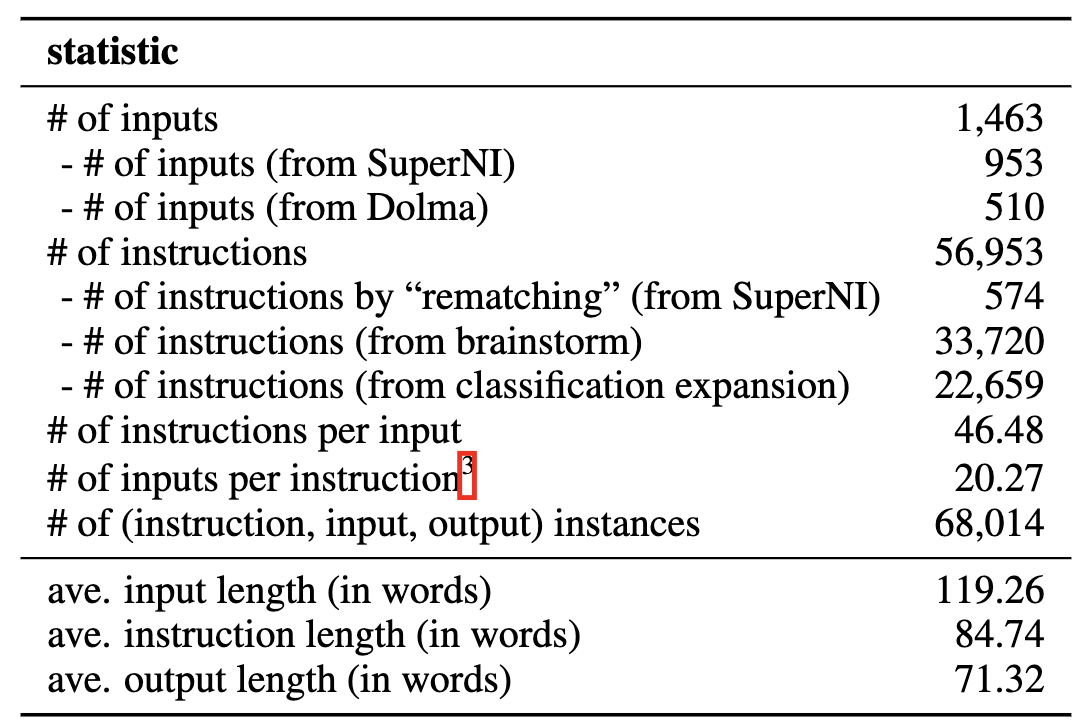

Table 1: Statistics of MUFFIN.

Table 1 lists detailed statistics for the 68K (instruction, input, output) instances in MUFFIN. Note that some instructions are shared by multiple inputs because of our "instruction rematching" mechanism.
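As an illustration of how statistics like those in Table 1 could be derived from raw triples, the following Python sketch counts instances, unique inputs, unique instructions, and instructions reused across more than one input (as happens under instruction rematching). The `Instance` type, the metric names, and the toy data are hypothetical; this does not reproduce the exact fields or metrics reported in Table 1.

```python
from collections import defaultdict
from typing import NamedTuple


class Instance(NamedTuple):
    """One (instruction, input, output) triple; field names are illustrative."""
    instruction: str
    input: str
    output: str


def dataset_stats(instances: list[Instance]) -> dict[str, float]:
    """Summarize triples in the spirit of Table 1 (hypothetical metric names)."""
    inputs_per_instruction: dict[str, set[str]] = defaultdict(set)
    instructions_per_input: dict[str, set[str]] = defaultdict(set)
    for inst in instances:
        inputs_per_instruction[inst.instruction].add(inst.input)
        instructions_per_input[inst.input].add(inst.instruction)

    # An instruction counts as "shared" if it is paired with more than one input.
    shared = sum(1 for ins in inputs_per_instruction.values() if len(ins) > 1)
    # Average number of distinct instructions attached to each input.
    total_pairs = sum(len(ins) for ins in instructions_per_input.values())
    num_inputs = len(instructions_per_input)
    return {
        "num_instances": len(instances),
        "num_unique_inputs": num_inputs,
        "num_unique_instructions": len(inputs_per_instruction),
        "num_shared_instructions": shared,
        "avg_instructions_per_input": total_pairs / max(num_inputs, 1),
    }


if __name__ == "__main__":
    toy = [
        Instance("Summarize the text.", "Text A", "..."),
        Instance("Count the nouns.", "Text A", "3"),
        Instance("Summarize the text.", "Text B", "..."),
    ]
    print(dataset_stats(toy))
```

In this toy example, "Summarize the text." is paired with two different inputs, so `num_shared_instructions` is 1 and each input carries 1.5 instructions on average.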

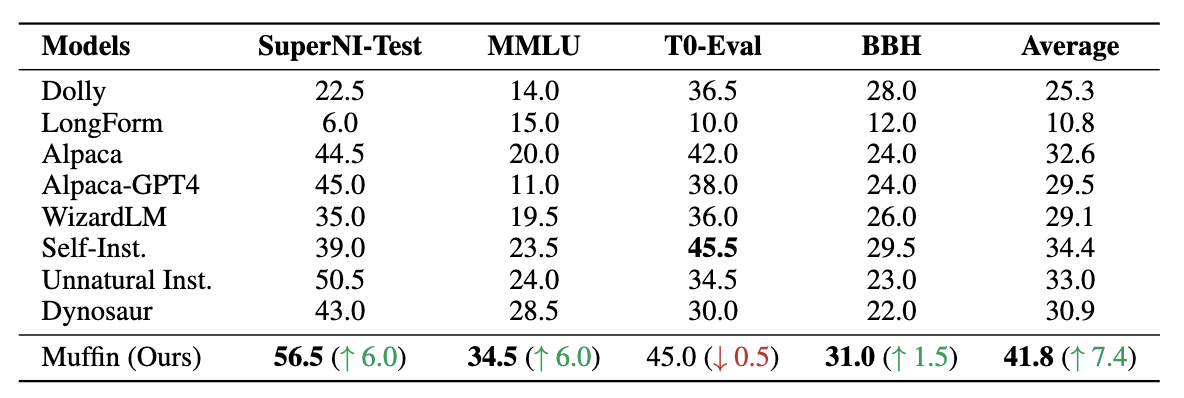

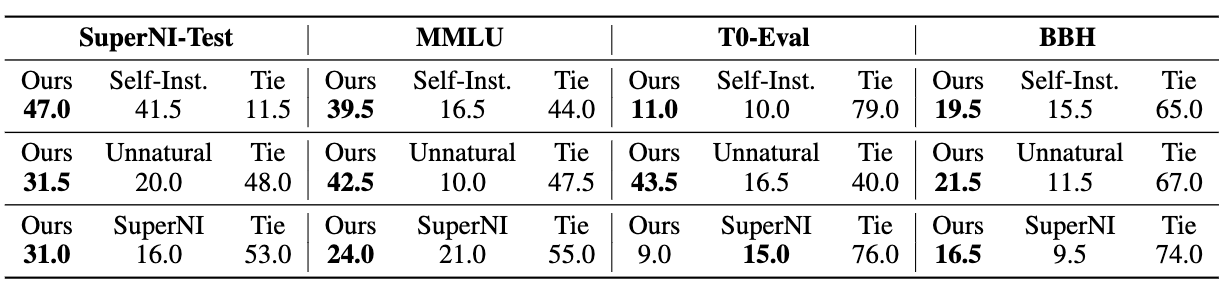

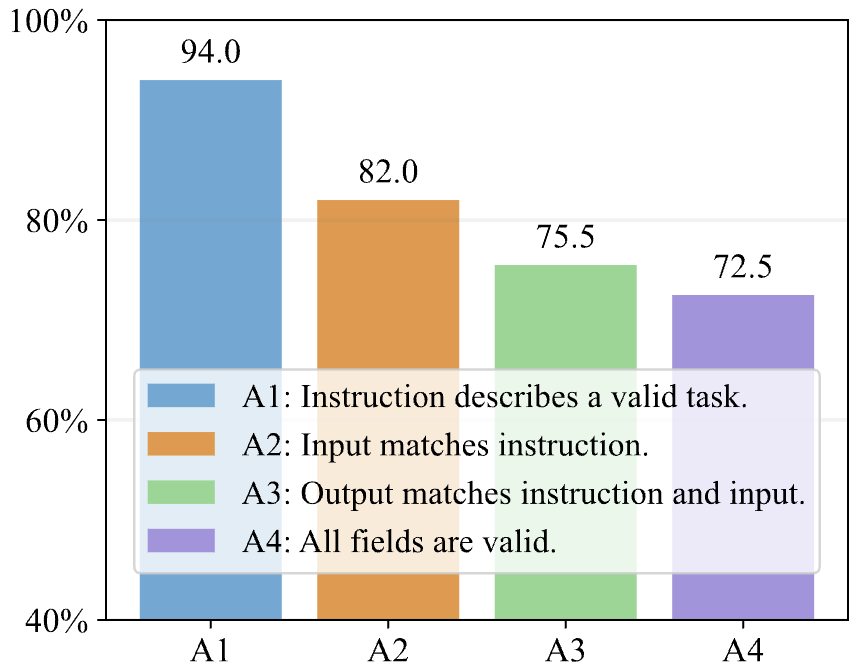

Figure 3: Human evaluation on the data quality.

To assess data quality, we randomly sampled 200 instances from our dataset (200 random inputs, with one randomly chosen instruction per input). Two NLP graduate students served as annotators. Following previous work, each annotator answered three questions for each instance: i) whether the instruction describes a valid task, given only the instruction (A1); ii) whether the instruction appropriately matches the input, given only the instruction-input pair (A2); and iii) whether the output correctly responds to the instruction and input (A3). We also recorded the instances for which all three fields were correct (A4). Figure 3 shows the proportion of instances judged correct for each question.
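The sampling step and the A1 to A4 ratios can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the function names, the tuple and dict formats, and the fixed seed are hypothetical. The section does not say how the two annotators' labels were combined, so `correct_ratios` simply scores one annotator's labels at a time.

```python
import random
from collections import defaultdict


def sample_for_annotation(instances, n_inputs=200, seed=0):
    """Pick n_inputs distinct inputs, then one random instruction per input.

    instances: iterable of (instruction, input, output) tuples (assumed format).
    """
    rng = random.Random(seed)
    by_input = defaultdict(list)
    for instruction, inp, output in instances:
        by_input[inp].append((instruction, inp, output))
    # Sort keys so the draw is reproducible for a given seed.
    chosen = rng.sample(sorted(by_input), k=min(n_inputs, len(by_input)))
    return [rng.choice(by_input[inp]) for inp in chosen]


def correct_ratios(judgments):
    """Fraction judged correct per question for one annotator.

    judgments: list of dicts with boolean keys "A1", "A2", "A3" (assumed format).
    A4 counts an instance as correct only if A1, A2, and A3 are all correct.
    """
    n = len(judgments)
    ratios = {q: sum(j[q] for j in judgments) / n for q in ("A1", "A2", "A3")}
    ratios["A4"] = sum(j["A1"] and j["A2"] and j["A3"] for j in judgments) / n
    return ratios
```

Because A4 requires all three answers to be correct, its ratio can never exceed the smallest of the A1, A2, and A3 ratios.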