AAAR-1.0: Assessing AI's Potential to Assist Research

{renze.lou, wenpeng}@psu.edu

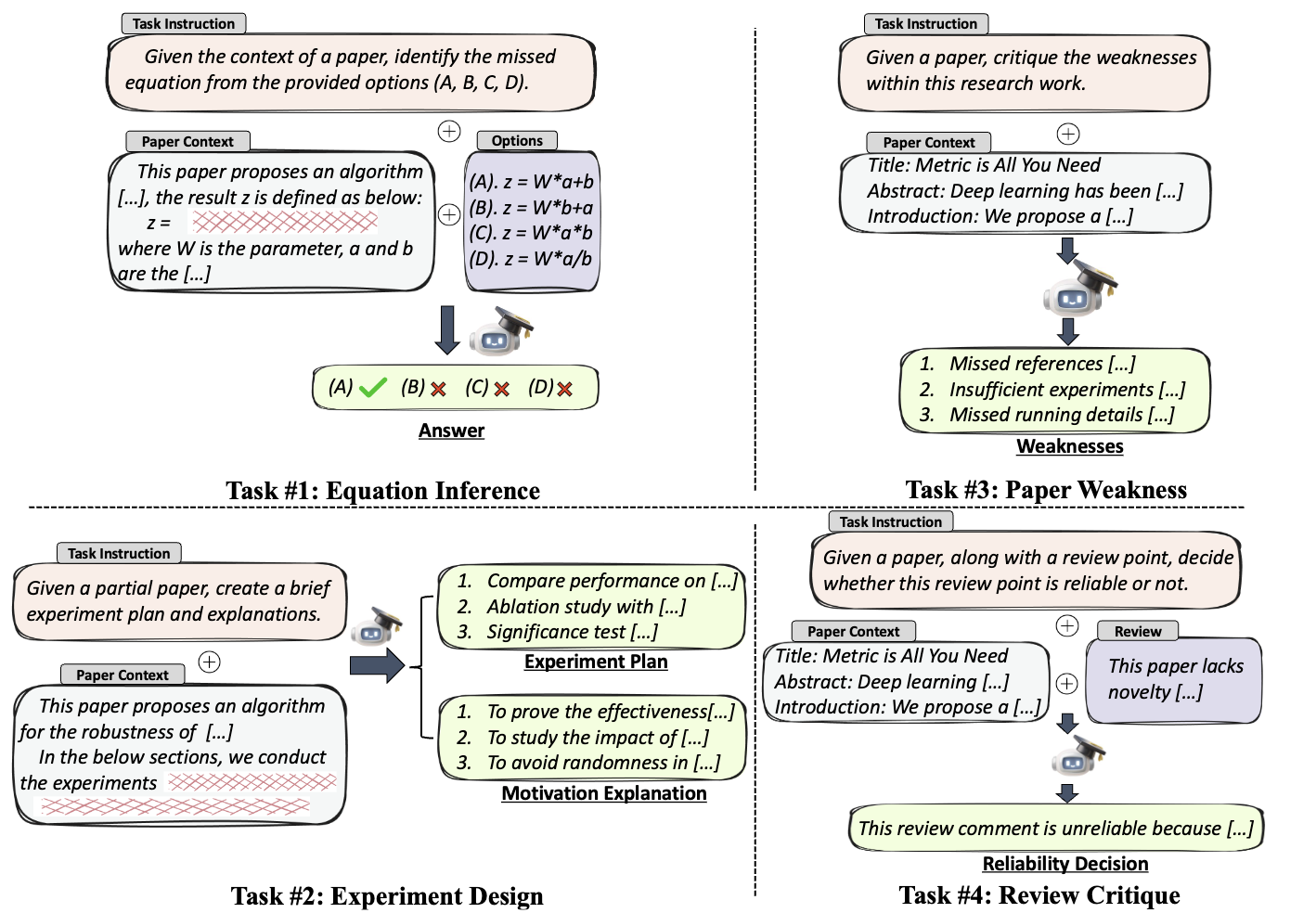

We introduce AAAR-1.0 ("1.0" denotes that this work is the beginning of a series), a benchmark dataset designed to evaluate LLM performance on four fundamental, expertise-intensive research tasks that most AI and Machine Learning researchers encounter daily:

(i) EquationInference, assessing the correctness of equations based on the contextual information in paper submissions;

(ii) ExperimentDesign, designing experiments to validate research ideas and solutions;

(iii) PaperWeakness, identifying weaknesses in paper submissions;

(iv) ReviewCritique, identifying whether each segment in human reviews is deficient or not.

To ensure data quality, senior AI researchers with extensive domain expertise perform data annotation for AAAR-1.0, followed by rigorous multi-round data examination and filtering.

The proposed AAAR-1.0 benchmark is:

(a) Challenging: All four tasks require models to possess strong domain knowledge covering various cutting-edge research findings, as well as expert-level research experience, to the extent that even humans need substantial research accumulation to tackle the tasks we designed.

(b) Transparent & Quantitative: Tasks here are singular, stand-alone challenges (with clear input and output expectations) rather than a complicated task chain. Benefiting from the proposed task-specific metrics, it provides a more transparent and quantitative assessment of the model's research outputs.

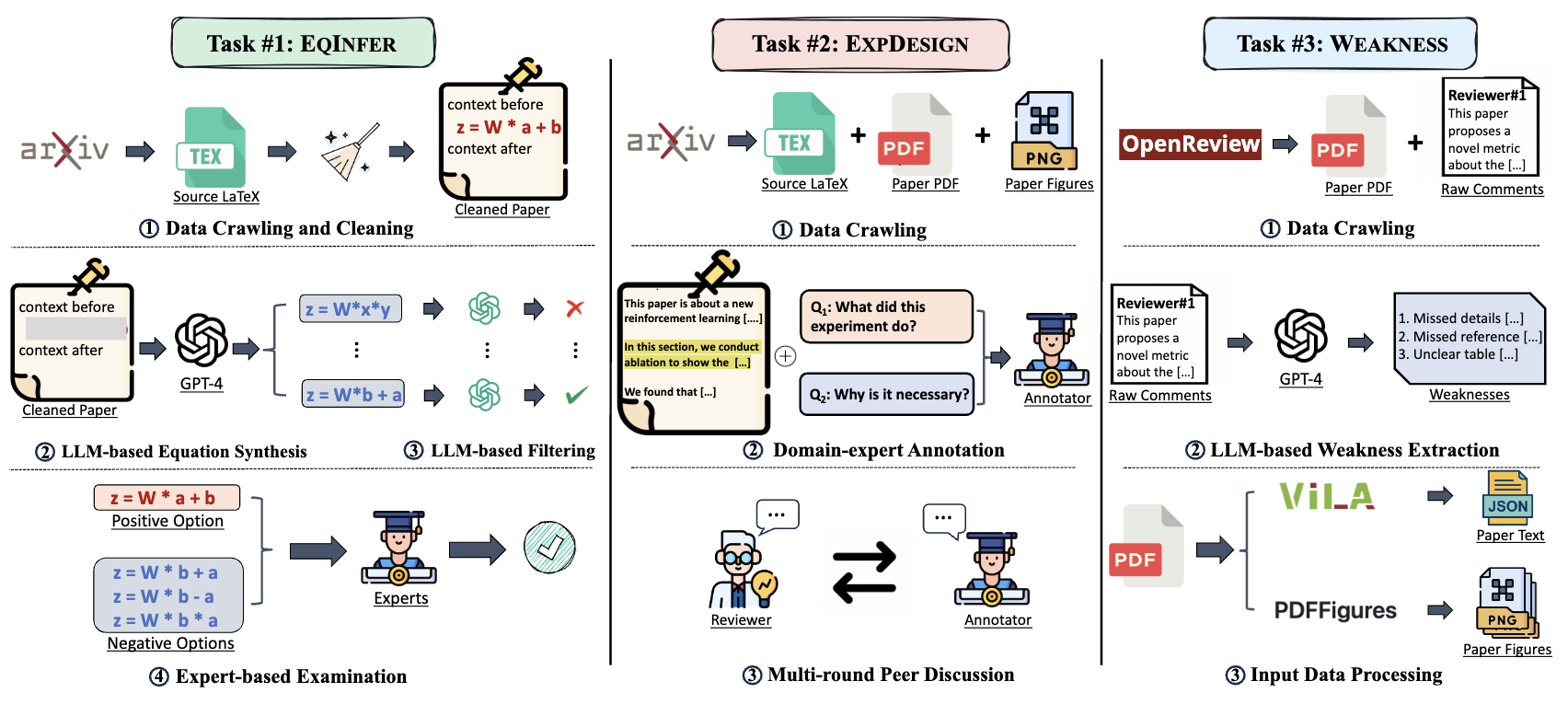

(i) EquationInference: we first crawl the source LaTeX data from arXiv and extract all the human-written equations from each paper, which are used as positive options. Then, we employ LLMs to synthesize more equations based on the paper contexts; these serve as the negative options. Afterwards, LLM-based filtering ensures the negative options are contextually appropriate. Finally, manual examination by human experts further enhances the quality of the classification instances.

(ii) ExperimentDesign: we crawl all the source data from arXiv, including LaTeX code, PDF, and image files. After that, we ask experts to annotate the experiment plan and the corresponding explanations for each paper, followed by multi-round peer discussion to improve the annotation accuracy.

(iii) PaperWeakness: since under-review drafts are required for this task, we crawl paper PDFs from the OpenReview website instead of arXiv. With the help of LLMs, we extract all the weaknesses from the raw comments while keeping the reviewers' original words. As not all the under-review papers have arXiv sources, we use parsing tools to get the final input text and images from PDFs.

(iv) ReviewCritique: we reuse the data from our recent work (Du et al., 2024), where we crawled papers' initial submissions along with their reviews from OpenReview and employed more than 40 AI research experts to label each review segment (i.e., deficient or not), with detailed human explanations. In total, there were 100 papers with 380 human reviews. Each review was divided into sentence-level segments, resulting in 11,376 review segments (viewpoints).
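For a sense of scale, the ReviewCritique counts above can be turned into per-paper and per-review averages. The snippet below is only a small arithmetic sketch; the variable names are illustrative and the three counts are the ones quoted in the text.

```python
# Counts quoted in the ReviewCritique description above.
num_papers = 100
num_reviews = 380
num_segments = 11_376

# Simple averages derived from those counts.
reviews_per_paper = num_reviews / num_papers
segments_per_review = num_segments / num_reviews
segments_per_paper = num_segments / num_papers

print(f"{reviews_per_paper:.1f} reviews per paper")      # 3.8
print(f"{segments_per_review:.1f} segments per review")  # 29.9
print(f"{segments_per_paper:.1f} segments per paper")    # 113.8
```

On average, then, each paper comes with roughly four reviews, and each review is split into about thirty labeled segments.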

Since AAAR-1.0 introduces expertise-demanding research tasks with language outputs, semantic-based metrics are necessary. We propose several task-specific metrics for quantitatively assessing LLM research outputs.

Please refer to our paper for the formal mathematical definitions of these metrics.

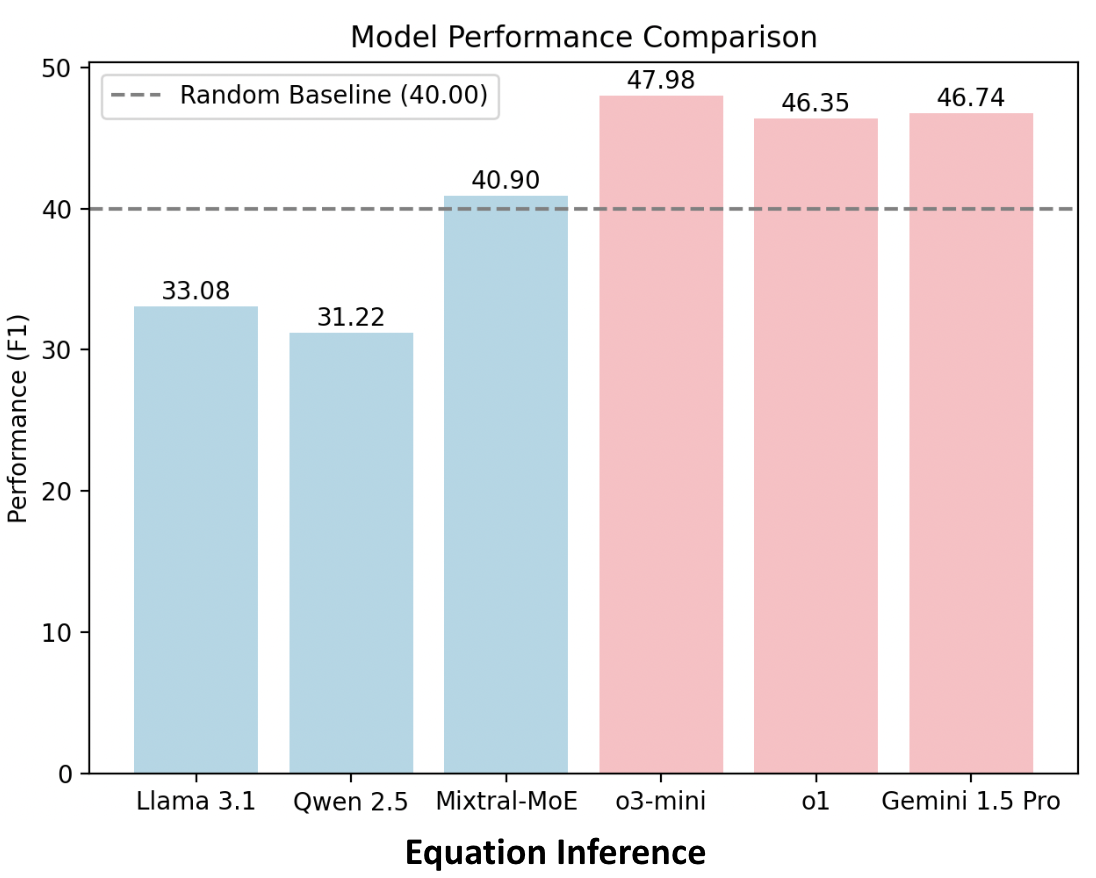

Figure 3 shows the main results on EquationInference. First, a simple baseline that predicts all equations as positive achieves 40% F1, and nearly all open-source LLMs fail to beat even this naive baseline. Meanwhile, the strong closed-source LLMs show no significant advantage over the random baseline: the best LLM on this task obtains only 47.98%. The generally high recall with low precision indicates that the LLMs are generally behaving similarly to the random baseline, i.e., simply predicting any given equation as correct instead of truly reasoning over the paper context.
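The 40% F1 of the all-positive baseline can be reproduced with a short calculation. The sketch below assumes standard F1 on the positive class and assumes that one in four equations is correct (25% positives); that ratio is not stated in the text here, it is simply the ratio under which an all-positive predictor lands at exactly 40% F1. The function names are hypothetical.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Standard F1 on the positive class, computed from raw counts."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def all_positive_baseline_f1(num_positive: int, num_negative: int) -> float:
    """F1 of a classifier that labels every equation as correct."""
    # Every positive is recovered (fn = 0); every negative becomes a false positive.
    return f1_score(tp=num_positive, fp=num_negative, fn=0)


# Assumed ratio: 1 correct equation for every 3 incorrect ones.
baseline = all_positive_baseline_f1(num_positive=1, num_negative=3)
print(f"{baseline:.2f}")  # 0.40
```

Under this assumption the baseline has perfect recall and 25% precision, which is the same high-recall, low-precision profile the LLM results exhibit.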

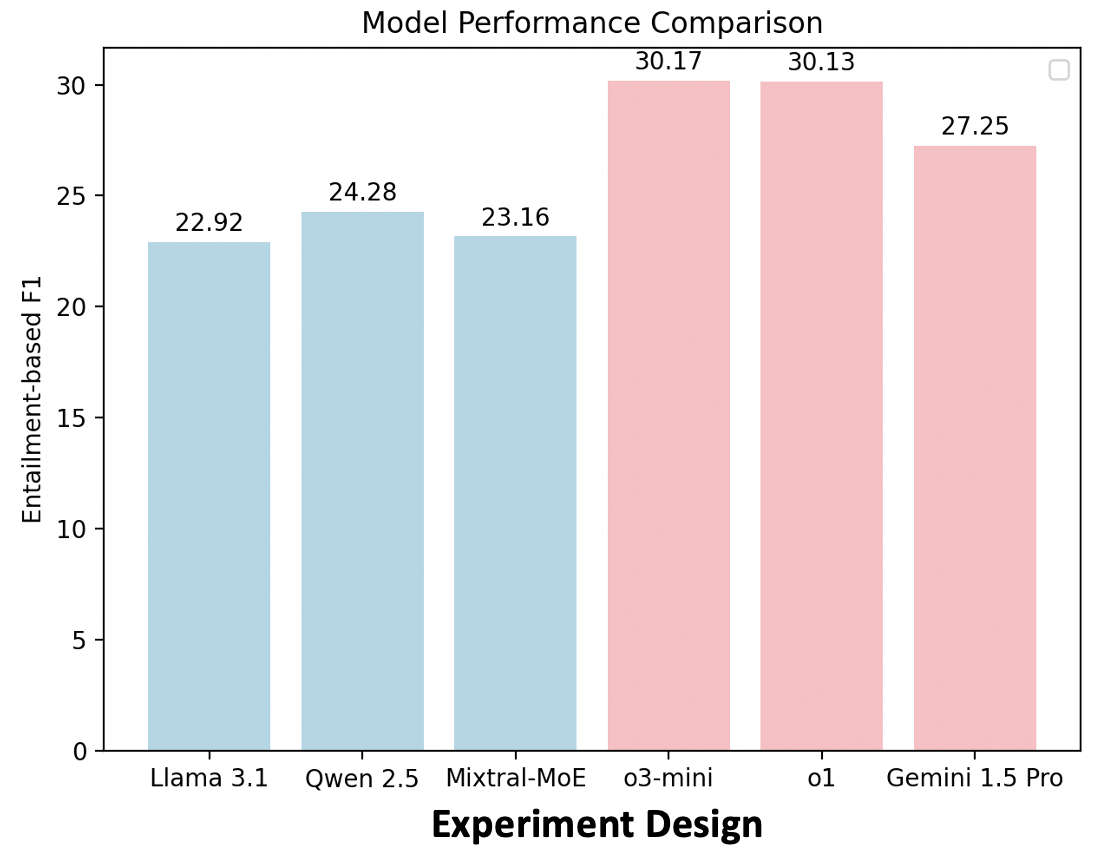

Figure 4 shows the main results on ExperimentDesign. Here, the closed-source LLMs generally outperform the open-source LLMs. However, the scores of all LLMs are relatively low, implying that the LLMs consistently miss ground-truth experiments from the original paper (low recall) and tend to generate novel experiments that do not appear in the original paper (low precision).
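To make the precision and recall reading concrete, here is a minimal sketch of how a generated experiment list could be scored against a ground-truth list. It is not the metric defined in the paper: the word-overlap matcher `token_overlap`, the `threshold` value, and the example experiment strings are all assumptions chosen for illustration, standing in for a proper semantic matcher.

```python
from typing import Callable, Sequence


def token_overlap(a: str, b: str) -> float:
    """Jaccard overlap of lowercase word sets; a crude stand-in for a semantic matcher."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def precision_recall(
    predicted: Sequence[str],
    reference: Sequence[str],
    similarity: Callable[[str, str], float] = token_overlap,
    threshold: float = 0.5,  # assumed cut-off for counting two items as a match
) -> tuple[float, float]:
    """Precision: share of predictions that match some reference item.
    Recall: share of reference items matched by some prediction."""
    def matched(item: str, pool: Sequence[str]) -> bool:
        return any(similarity(item, other) >= threshold for other in pool)

    precision = sum(matched(p, reference) for p in predicted) / len(predicted) if predicted else 0.0
    recall = sum(matched(r, predicted) for r in reference) / len(reference) if reference else 0.0
    return precision, recall


# Made-up example lists, for illustration only.
reference = ["ablation study on retrieval module", "evaluation on out-of-domain data"]
predicted = ["ablation study on the retrieval module", "human evaluation of fluency", "scaling analysis"]

p, r = precision_recall(predicted, reference)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.33 recall=0.50
```

In this toy case, two of the three generated experiments have no counterpart in the reference list (lowering precision), and one of the two reference experiments is never proposed (lowering recall).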

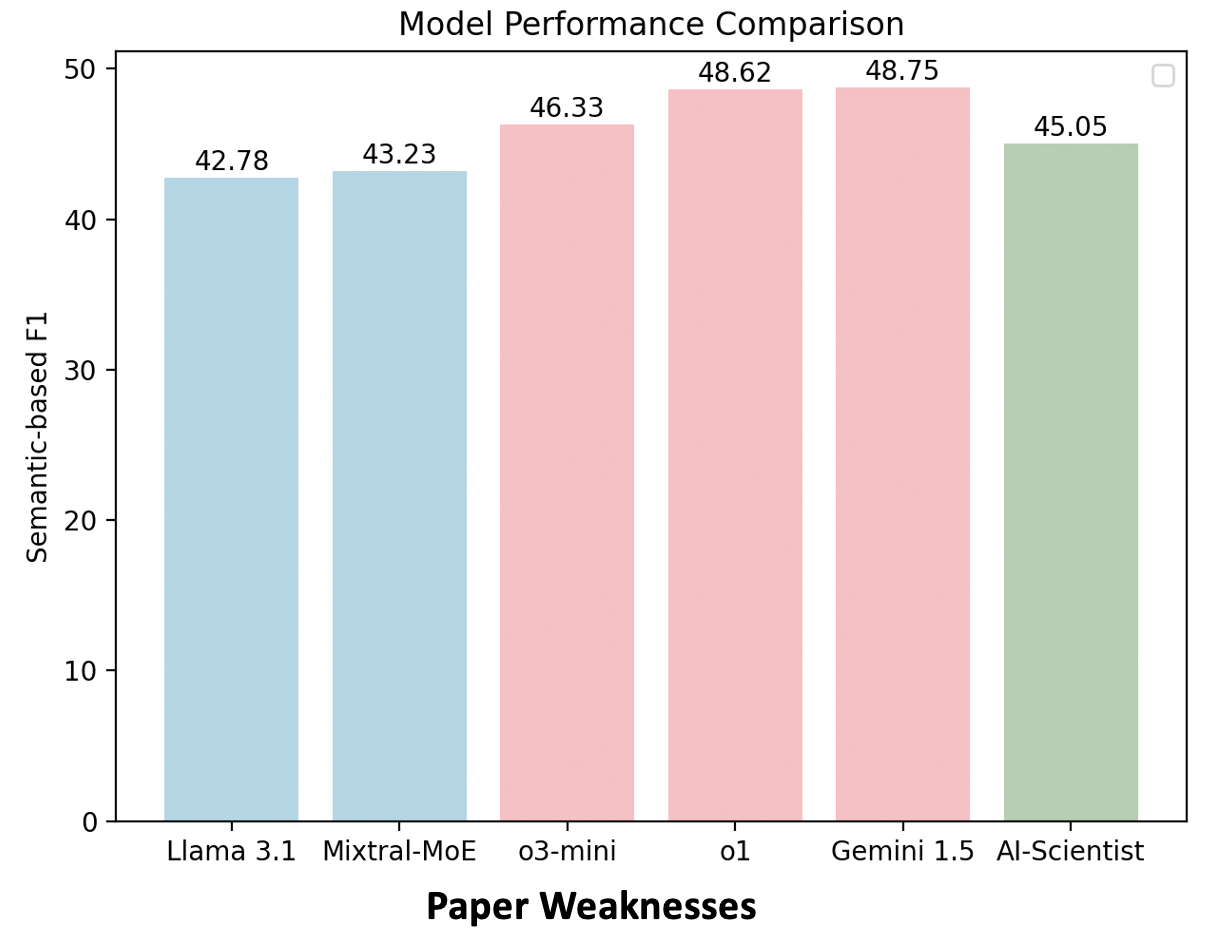

Figure 5 shows the main results on PaperWeakness, where the closed-source LLMs generally outperform the open-source LLMs overall. However, there is still a considerable gap in weakness diversity between the LLMs and human experts. Compared with human reviews, most LLM-generated weaknesses are vague and lack the necessary knowledge of frontier research. Surprisingly, AI-scientist performs worse than its backbone, GPT-4o, which underscores the difficulty of PaperWeakness: simply adopting popular prompting techniques does not adequately address this task.

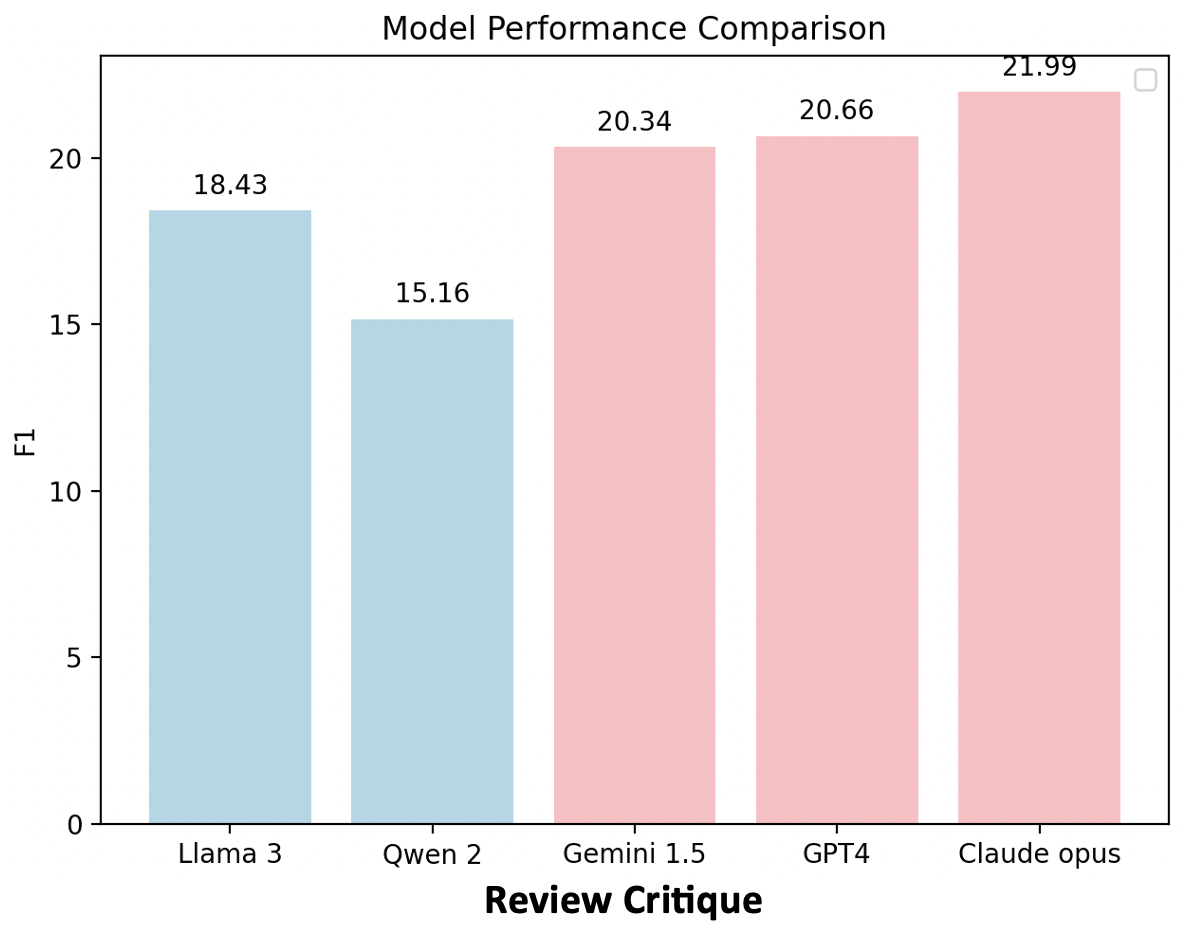

Figure 6 shows the results on ReviewCritique. Closed-source models (GPT-4, Claude Opus, and Gemini 1.5) generally outperform open-source models (Llama3-8B and 70B, Qwen2-72B) in F1 score. Claude Opus achieves the highest F1 scores, with GPT-4 and Gemini 1.5 performing slightly worse. Despite the superior performance of the closed-source models, their F1 scores remain relatively low even with different prompt strategies, highlighting the challenges LLMs face in such expertise-intensive tasks and emphasizing the importance of human expertise in the meta-reviewing process.

@article{Lou2024AAAR,

title={{AAAR-1.0}: Assessing AI's Potential to Assist Research},

author={Renze Lou and Hanzi Xu and Sijia Wang and Jiangshu Du and Ryo Kamoi and Xiaoxin Lu and Jian Xie and Yuxuan Sun and Yusen Zhang and Jihyun Janice Ahn and Hongchao Fang and Zhuoyang Zou and Wenchao Ma and Xi Li and Kai Zhang and Congying Xia and Lifu Huang and Wenpeng Yin},

journal={arXiv preprint arXiv:2410.22394},

year={2024}

}